Category: Software

-

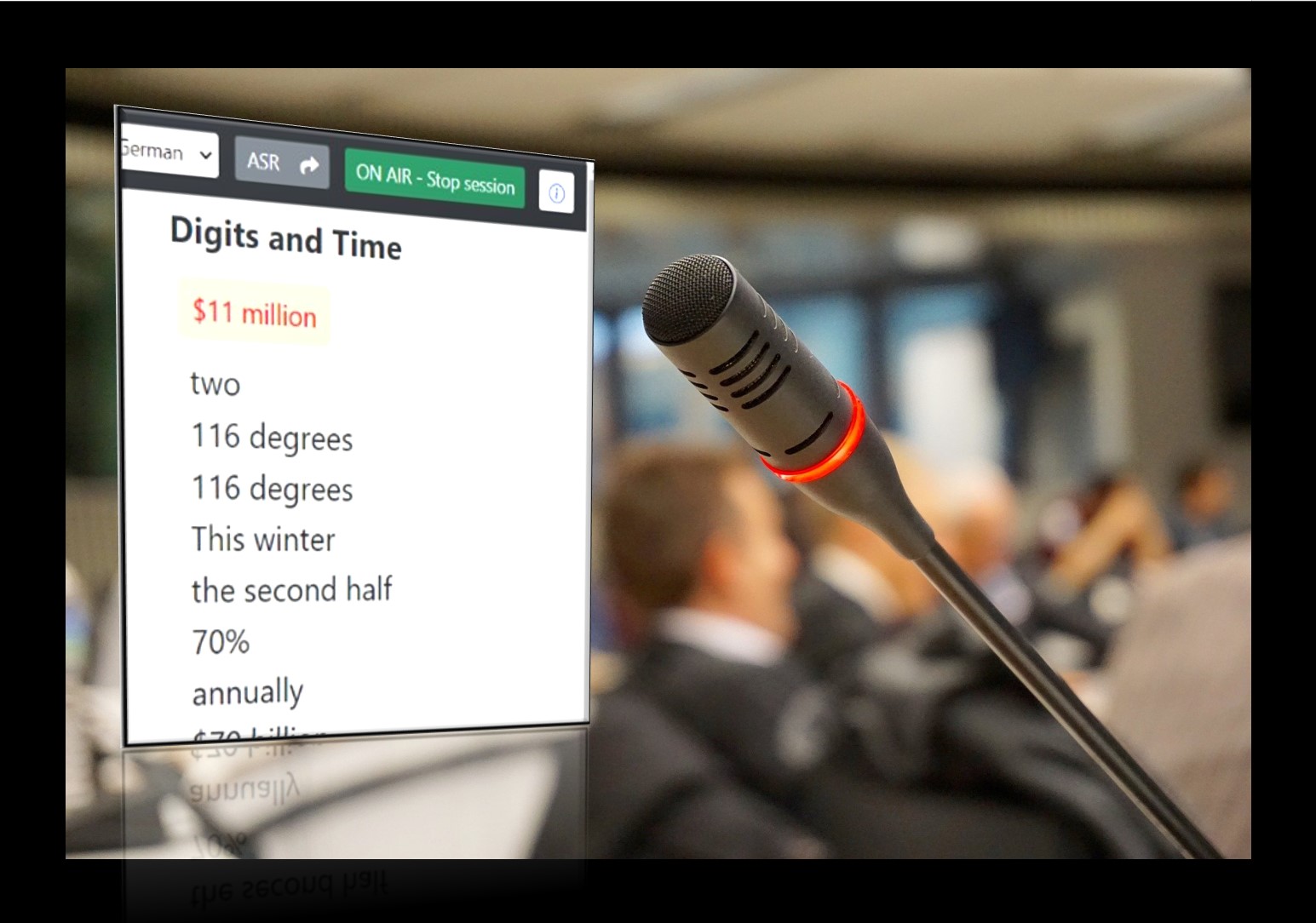

Live prompting CAI tools – a market snapshot

In the aftermath of our inspiring AIIC AI Day in Rome, I felt that an overview of all live-prompting CAI (Computer-Assisted Interpreting) tools might be very much appreciated by many colleagues. So here we go – these are the tools providing live prompting of terms, numbers, names or complete transcripts in the booth during simultaneous…

-

The InterpretBank Artificial Boothmate in action | guest article by Benjamin Gross

As interpreters, we’re used to being team players. We don’t like to work alone – whether that be in a booth on-site or remotely from our home offices. Whatever we do, we can trust our favourite people – our booth mates. So what if we could have an additional mate in our booth? A mate…

-

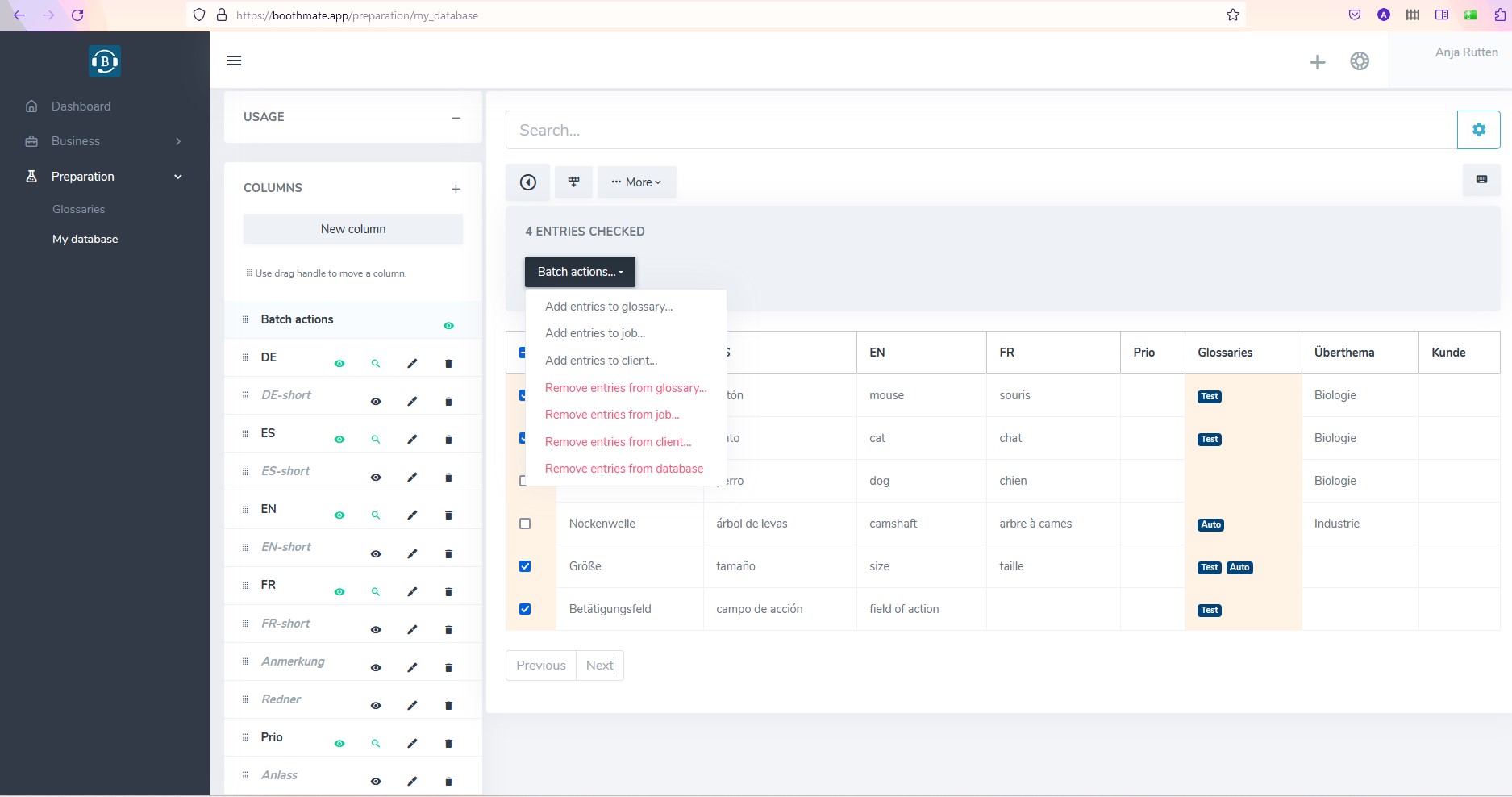

New (beta) version of InterpretersHelp with lots of great new features to discover – now available at boothmate.app

InterpretersHelp is a very straightforward browser-based terminology and job management application that allows for secure online collaboration. It is great for keeping your terminology up to date on all devices and sharing it with colleagues. It has been around for about ten years now, reason enough for Benoit Werner, co-founder and technical brain of this…

-

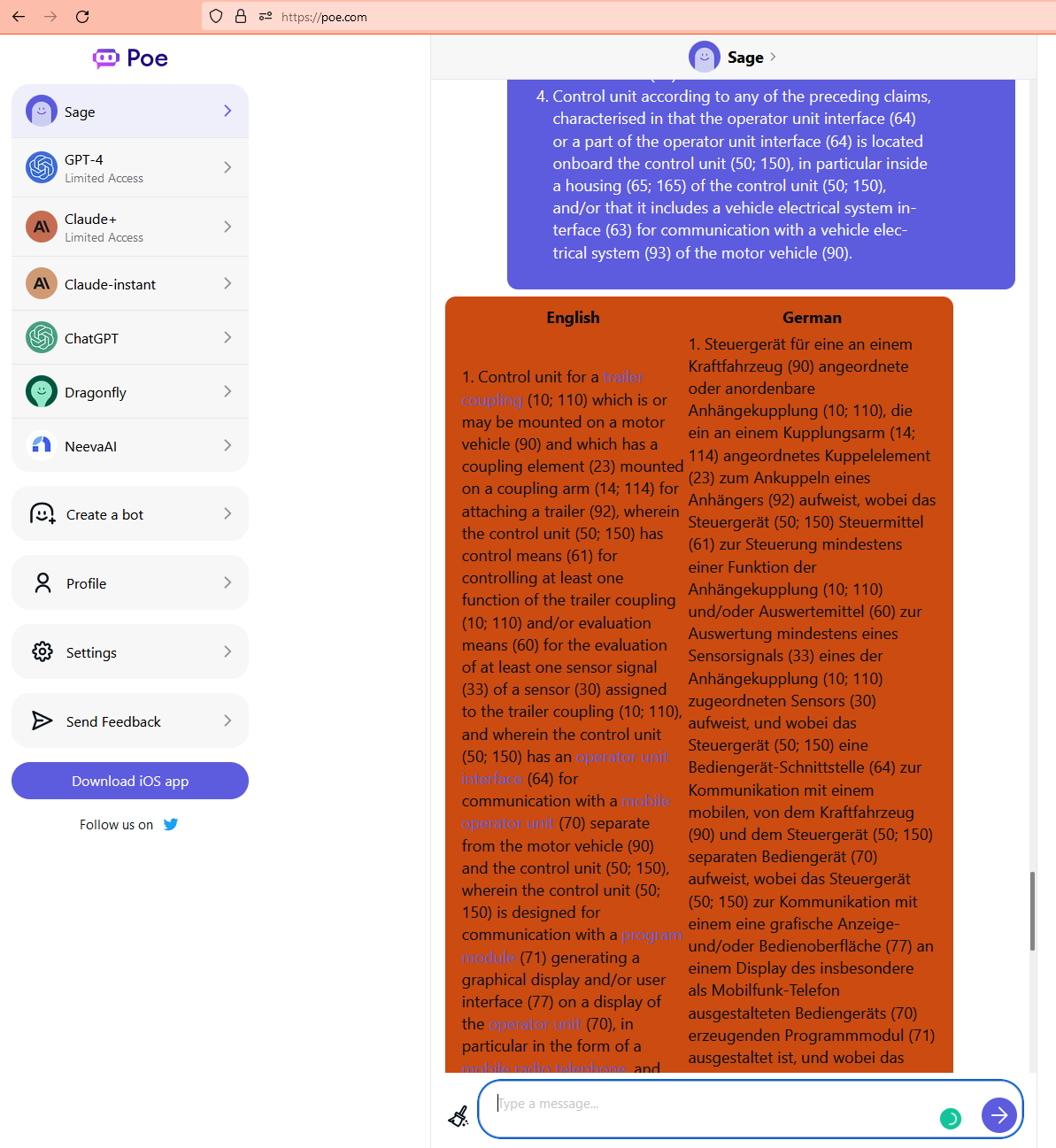

How to tell a chatbot like ChatGPT or Sage to align texts in different languages in a table (and get a summary as well)

If you have the same text in several languages and you want the different versions neatly arranged in a table with the sentences in the different language versions next to each other, a chatbot like ChatGPT may be able to help. Especially when texts come in PDF format, just copying and pasting them can already…

-

Use ChatGPT, DeepL & Co. to boost your conference preparation

In this article I would like to show you how I like to use DeepL, Google Translate, Microsoft Translator, and ChatGPT as a conference interpreter, especially in conference preparation. You will also see how to use GT4T to combine the different options. I did some testing for this year’s Innovation in Interpreting Summit and I…

-

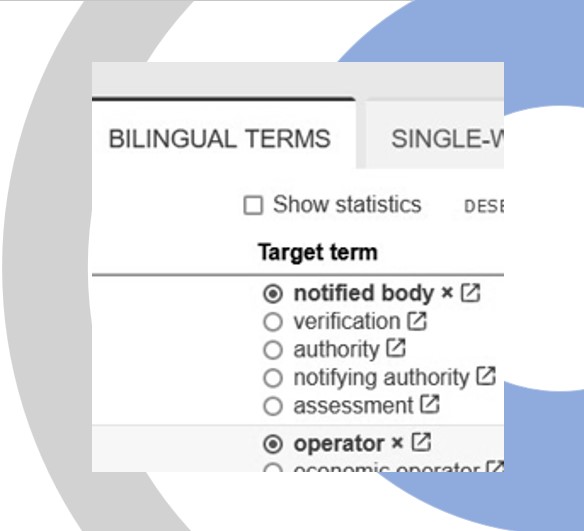

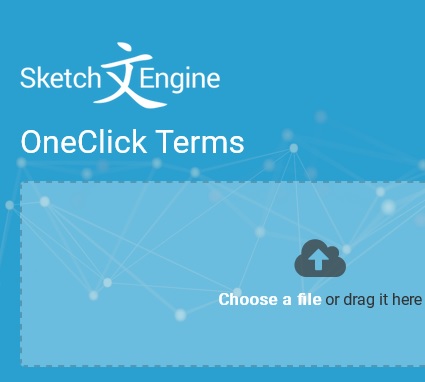

Automatic bilingual term extraction with OneClickTerms by SketchEngine

When I wrote about this great terminology extraction tool OneClickTerms back in 2017, I was already quite enthusiastic about how useful it was for last-minute conference preparation. But the one thing I didn’t mention back then (or maybe it wasn’t available yet) was that OneClickTerms not only extracts terminology from monolingual documents, but it…

-

The new IATE interpreters’ view – what’s in it for EU meeting preparation?

The EU’s terminology database has been around for quite some time – the project was launched back in 1999 and has been available to the public since 2007. Recently, it has been revamped, and an “interpreters’ view” has been added. All EU interpreters, staff and freelancers alike, have access to it, and it is tailored…

-

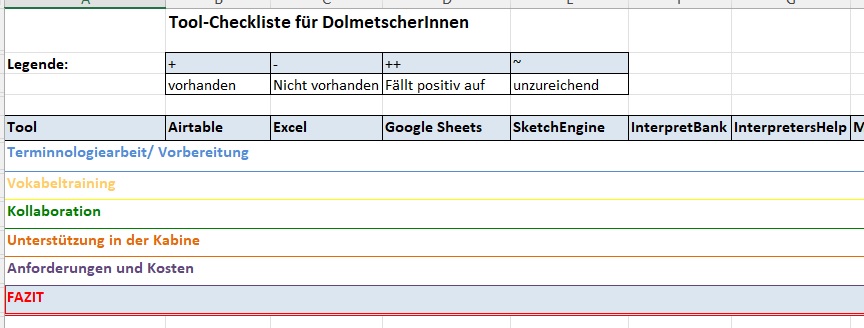

Checklist table of useful terminology tools for interpreters

Do you sometimes wonder which tools best serve your purpose as a conference interpreter? Well, two of my students did, and they came up with this very handy and detailed checklist. It covers all sorts of terminology, spreadsheet, and extraction tools with their pros and cons. Thanks a lot to Eliana Cajas Balcazar and Vanessa…

-

Document management tools – the nerdy, the geeky, and the classic

When it comes to handling heaps of documents on one single screen (or two or three), I just can’t decide which document management program best serves my purpose. So I thought it might be useful to give a short overview of my top three, OneNote, LiquidText, and PDF XChange Editor, with their pros and cons…

-

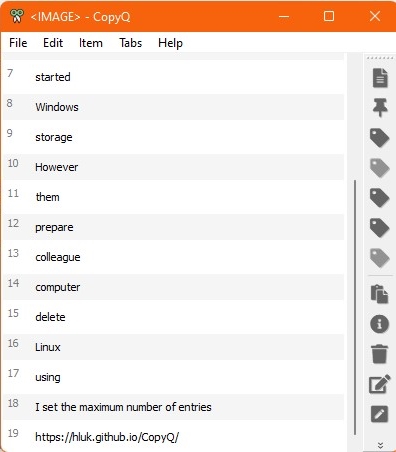

Use your clipboard for easier glossary building

When I prepare for a conference, I like to add expressions to a list and then look up equivalents, sort and categorise them later. But the other day I was so fed up with hopping back and forth between my glossary file and the PDFs, web pages, etc. I was browsing in preparation (plus my colleague…

-

My hands-, eyes- and ears-on experience with SmarTerp, including a short interview with the UI designer

Last December, I was among the lucky ones who could beta-test SmarTerp, a CAI (Computer-Aided Interpreting) tool in development that offers speech-to-text support to simultaneous interpreters in the booth. For those who haven’t heard of SmarTerp before, this is what it is all about: SmarTerp comprises two components: 1. An RSI (Remote Simultaneous Interpreting) platform,…

-

Here comes my first AI-written blog post

I was feeling a bit lazy today, so I thought I might give Artificial Intelligence a try. And here we go, this is my first blog post written by an AI writing assistant: “How to Use the Best Interpreting Technology”. Translation and interpreting services are crucial for business success. If you’re a business owner, it…

-

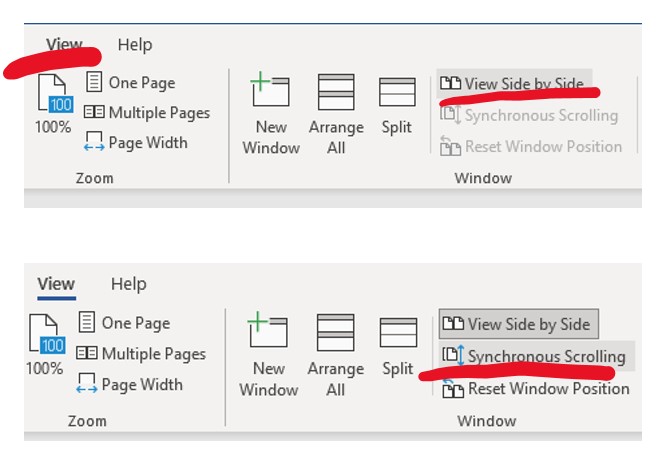

Synchronous Scrolling in Microsoft Word

Whilst it is usually fancy new apps designed for the benefit of interpreters (or everybody) that get my attention, this time it was boring old Microsoft Word I got excited about. Maybe I am the last one to discover the synchronous scrolling function, but I liked it so much that I wanted to share it.…

-

How to Make CAI Tools Work for You – a Guest Article by Bianca Prandi

After conducting research and providing training on Computer-Assisted Interpreting (CAI) for the past 6 years, I feel quite confident in affirming that there are three indisputable truths about CAI tools: they can potentially provide a lot of advantages, do more harm than good if not used strategically, and most interpreters know very little about them.…

-

Remote Simultaneous Interpreting … do we really need it – and does it even work?!

In a workshop jointly organised by AIIC and VKD and led by Klaus Ziegler, we had the opportunity to explore these questions in depth in Hamburg in mid-May. In a coworking space, a group of organising interpreters spent two days learning, discussing and, in an interpreting hub, working with remote simultaneous interpreting via the cloud-based simultaneous interpreting software Kudo…

-

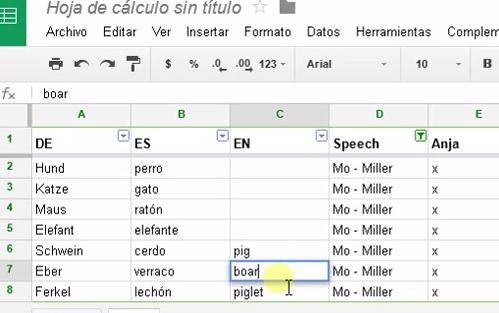

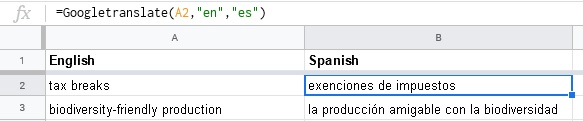

How to Build Your Self-Translating Glossary in Google Sheets

I am certainly not saying that Google can create your glossaries for you when preparing for a technical conference on African wildlife or nanotubes. But if you know your languages well enough to tell a bad translation from a good one, it may still be a time-saver. Especially for those words you don’t use every…

-

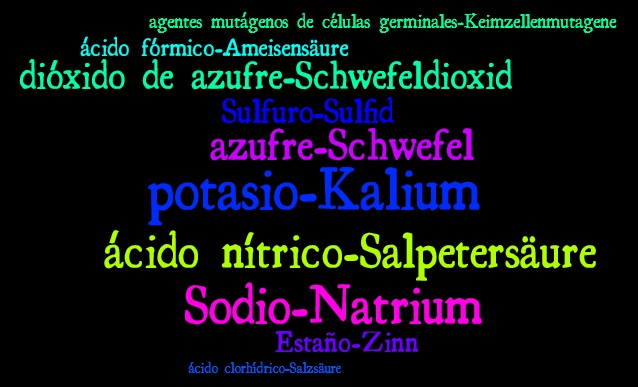

Word Clouds – much nicer than Word Lists

I have been wondering for quite some time if word lists are the best thing I can come up with as a visual support in the booth. They are not exactly appealing to the eye, after all … So I started to play around with word cloud generators a bit to see if they are…

-

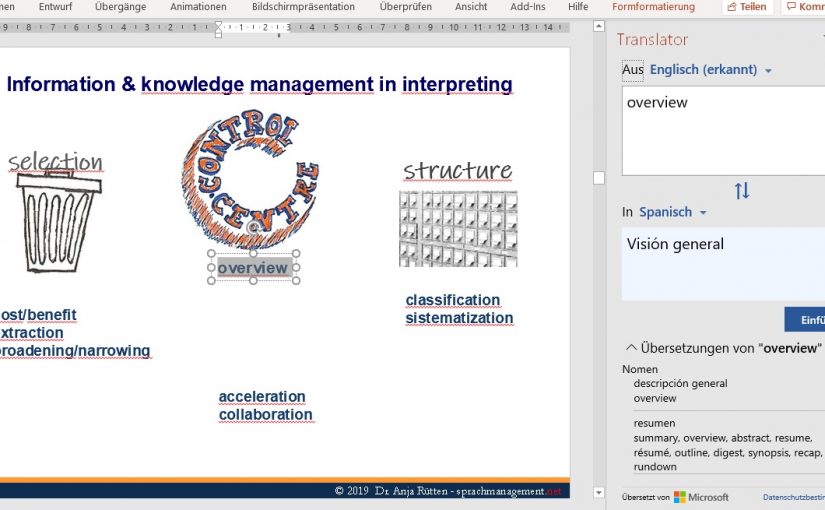

Microsoft Office Translator – Can it be of any help in the booth?

When it comes to Computer-Aided Interpreting (CAI), a question widely discussed in the interpreting community is whether information provided automatically by a computer in the booth could be helpful for simultaneous interpreters or if it would rather be a distraction. Or to put it differently: Would the cognitive load of simultaneous interpreting be increased by…

-

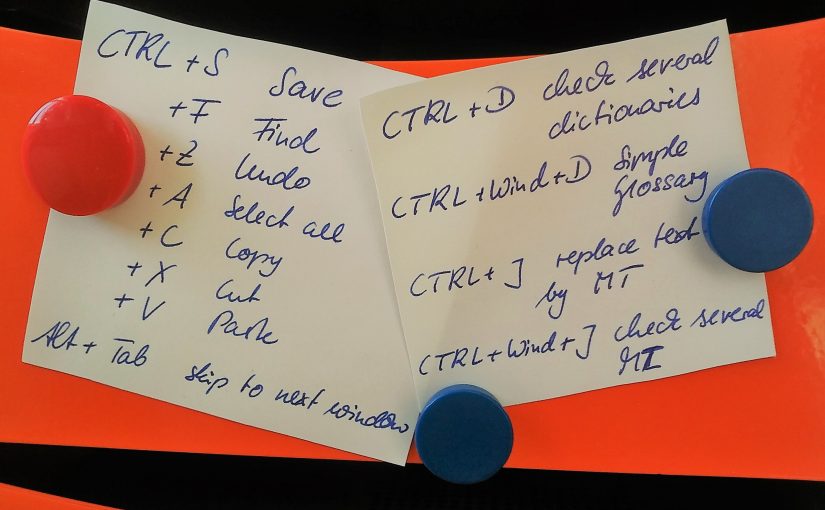

You love keyboard shortcuts? Meet GT4T!

GT4T – key shortcuts made for translators and interpreters (for German and Spanish, scroll down) If you asked me, everyone should learn key shortcuts at school together with their ABC. Once memorised, they are so convenient to use … unlike buttons on the screen, you just feeeeel them without having to look. It seems like…

-

Speechpool, InterpretimeBank & InterpretersHelp – the Perfect Trio for Deliberate Practice in Conference Interpreting

After testing the practice module of InterpretersHelp last month, the whole practice thing got me hooked. Whilst InterpretersHelp gives us the technical means to record our interpretation together with the original and receive feedback from peers, there are two more platforms out there which cover further aspects of the practice workflow: InterpretimeBank and Speechpool. To…

-

Cleopatra: an App for Automating Symbols for Consecutive Interpreting Note-Taking – Guest Article by Lourdes de la Torre Salceda

+++ For Spanish scroll down +++ The perfect symbol has just come to mind! I’ve been racking my brain for ages and I got it, finally! I’ve been inspired! But where should I write it down, now that I’m sunbathing on the beach! Has something similar ever occurred to you? As a millennial the first…

-

New Term Extraction Features in InterpretBank and InterpretersHelp – Thumbs up!

Extracting terminology from preparatory texts into a term database seems to be the hot topic of the moment, judging by what the two most active and innovative CAI (computer-assisted interpreting) tools, InterpretBank and InterpretersHelp, are currently working on. So while I am still waiting to become a Windows beta tester of Intragloss, the…

-

InterpretersHelp’s new Practice Module – Great Peer-Reviewing Tool for Students and Grown-up Interpreters alike

I have been wondering for quite a while now why peer feedback plays such a small role in the professional lives of conference interpreters. What with AIIC relying on peer review as its only admission criterion, why not follow that logic and have some kind of routine in place to reflect upon our performance every…

-

Flashterm revisited. A guest article by Anne Berres

+++ for the German version, please scroll down +++ One of everything, please! Have you ever wished there was a terminology management system (TMS) that would provide all the functions you are looking for and prepare for the conference largely automatically? Wouldn’t that be splendid? You’d just have to type in the event’s title and the…

-

Extract Terminology in No Time | OneClick Terms

[for German scroll down] What do you do when you receive 100 pages to read five minutes before the conference starts? Right, you throw the text into a machine and get out a list of technical terms that give you a rough overview of what it’s all about. Now finally, it looks like this dream…

-

Team Glossaries | Tips & Tricks from Magda and Anja | DfD 2017

Here you will find a few screenshots and brief notes from our short demo on “Team glossaries in GoogleSheets” at “Dolmetscher für Dolmetscher” (Interpreters for Interpreters) on 15 September 2017 in Bonn. You can find more detailed thoughts on collaborative glossary work in the cloud in this blog post. Magdalena Lindner-Juhnke and Anja Rütten. Basics of collaboration: Invitation to the online glossary; Expectations on both sides; “Share…

-

Paperless Preparation at International Organisations – an Interview with Maha El-Metwally

Maha El-Metwally has recently written a master’s thesis at the University of Geneva on tablet-based preparation for conferences of international organisations. She is a freelance conference interpreter with Arabic A, English B, and French and Dutch C, based in Birmingham. How come you know so much about the current preparation practice of conference interpreters at so…

-

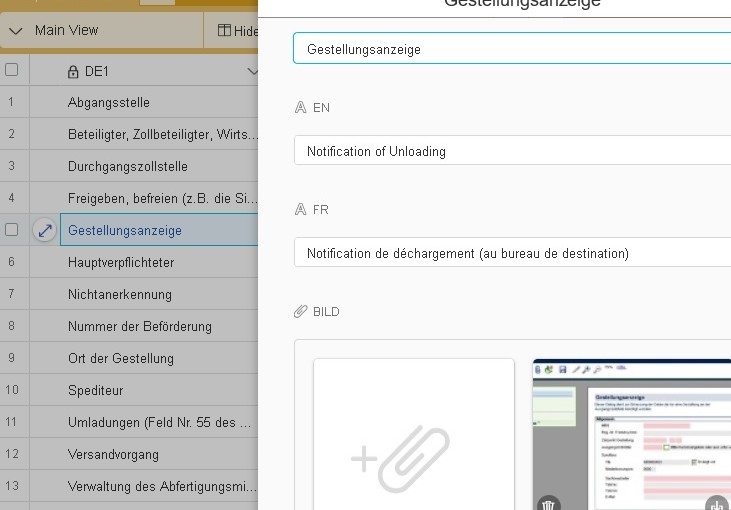

InterpretBank 4 review

InterpretBank by Claudio Fantinuoli, one of the pioneers of terminology tools for conference interpreters (or CAI tools), was full to the brim with useful functions and settings that hardly any other tool offers even before the new release. It was already presented in one of the first articles of this blog, back in 2014. So now…

-

How to save time when preparing video speeches

Videos as preparation material for a conference have countless advantages. The only drawback: they eat up a lot of time. Unlike with a written text, you cannot skim the whole thing and then dig deeper into the important passages, highlight them and scribble on them. Fortunately, Alex Drechsel has given this some thought in a blog post and dug up a few tools that put an end to this misery…

-

Can computers outperform human interpreters?

Unlike many people in the translation industry, I like to imagine that one day computers will be able to interpret simultaneously between two languages just as well as or better than human interpreters do, what with artificial neurons and the pattern-based learning of neural networks. After all, once hardware capacity allows for it, an artificial neural…

-

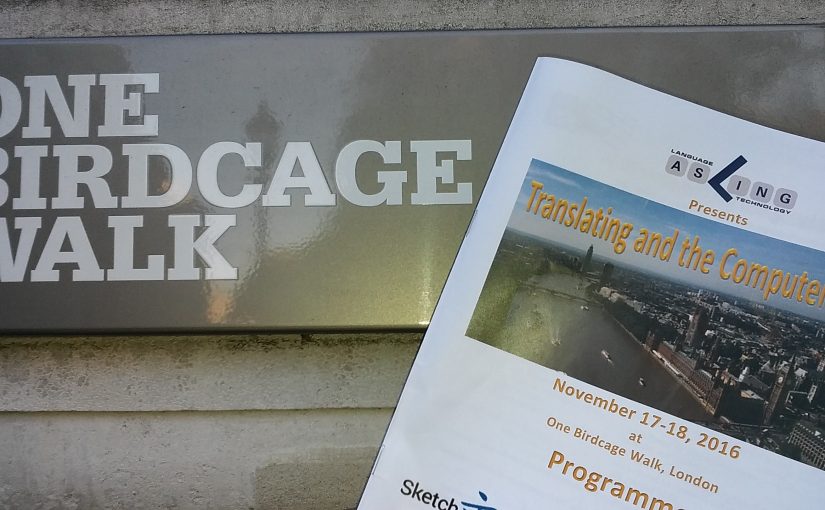

Impressions from Translating and the Computer 38

The 38th ‘Translating and the Computer’ conference in London has just finished, and, as always, I take home a lot of inspiration. Here are my personal highlights: Sketch Engine, a language corpus management and query system, offers loads of useful functions for conference preparation, like web-based (actually Bing-based) corpus-building, term extraction (the extraction results come…

-

Booth-friendly terminology management: Glossarmanager.de

Believe it or not, only a few weeks ago I came across yet another booth-friendly terminology management program (or rather it was kindly brought to my attention by a student when I talked about the subject at Heidelberg University). It has been around since 2008 and completely escaped my attention. So I am all the…

-

Why not listen to football commentary in several languages?

+++ for English, see below +++ for Spanish, further below +++ I don’t know about you, but when I watch a football match on TV, I sometimes wonder what the commentator of the other team or nationality is saying at that very moment. The idea of streaming (alternative) football commentary over the internet has…

-

Airtable.com – a great replacement for Google Sheets

+++ for English see below +++ The terminology management tool of my dreams has to be able to do everything: share data, work on all devices and be accessible both online and offline (as with Interpreters’ Help/Boothmate for Mac or Google Sheets), store company terminology and client background information as safely as possible (as with Interpreters’ Help), sort and filter (as in…

-

Dictation Software instead of Term Extraction?

+++ for English see below +++ Recently, when my doctor cheerfully dictated his thoughts to the computer instead of typing during our consultation, a question came to mind: “Why don’t I do that?” A brief bit of shop talk about dictation programs followed, and as soon as I got home, of course I had to try it out right away. The…

-

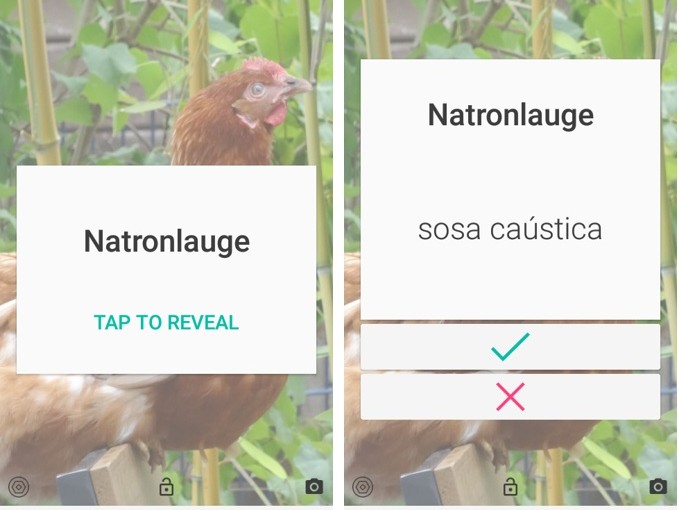

GetSEMPER.com – Persistent, though charming: your personal vocab trainer

“Activation of key terminology”, “memorisation” or “cramming vocabulary” – whatever you call it, a certain basic kit of technical terminology simply has to get into your head. To this end, you can print out lists, write flashcards or use a range of apps and programs (Anki, Phase 6, Langenscheidt and Pons were mentioned to me in a quick survey among colleagues; InterpretBank also offers…

-

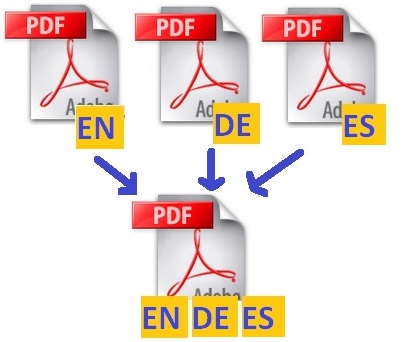

How to build one nice multilingual file from several PDFs

+++ for English see below +++ for Spanish, further below +++ We all know it: the annual report of more than a hundred pages, packed with charts, tables and valuable information and – hallelujah! – available not only in the original but also in first-rate translation(s). You could hardly wish for a better resource for interpreting preparation.…

-

Booth-friendly terminology management: Intragloss – the missing link between texts and glossaries

+++ for English see below +++ Anyone who has always been annoyed by constantly switching back and forth between speech texts or presentation slides on the one hand and the glossary on the other now has every reason to rejoice: Dan Kenig and Daniel Pohoryles from Paris have developed Intragloss, a program that lets you move terms directly from the text into your glossary…

-

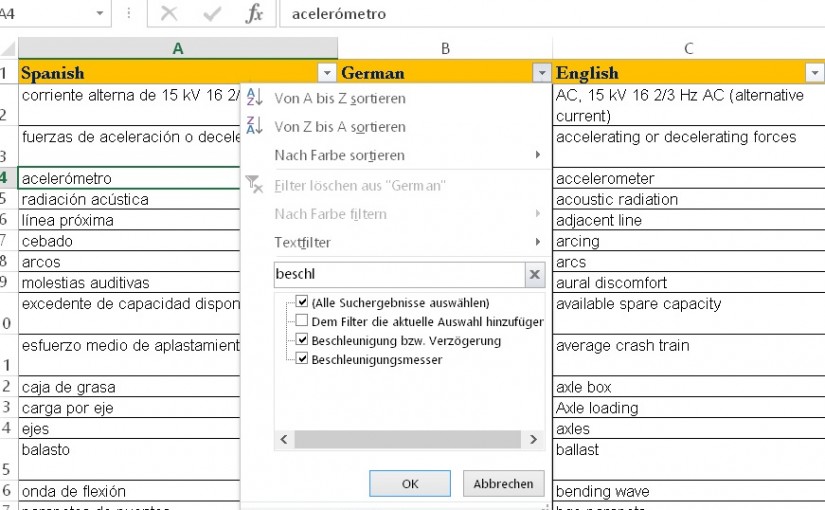

MS-Excel and MS-Access – are they any good for the booth?

There are quite a lot of programs tailored precisely to the needs of conference interpreters (for example quick search in the booth or structures for efficient assignment preparation), yet quite a number of colleagues still rely on run-of-the-mill solutions from the MS Office toolbox. Apart from Word – where I will refrain from any comment on its suitability…