Last December, I was among the lucky ones who could beta-test SmarTerp, a CAI (Computer-Aided Interpreting) tool in development that offers speech-to-text support to simultaneous interpreters in the booth.

For those who haven’t heard of SmarTerp before, this is what it is all about:

SmarTerp comprises two components:

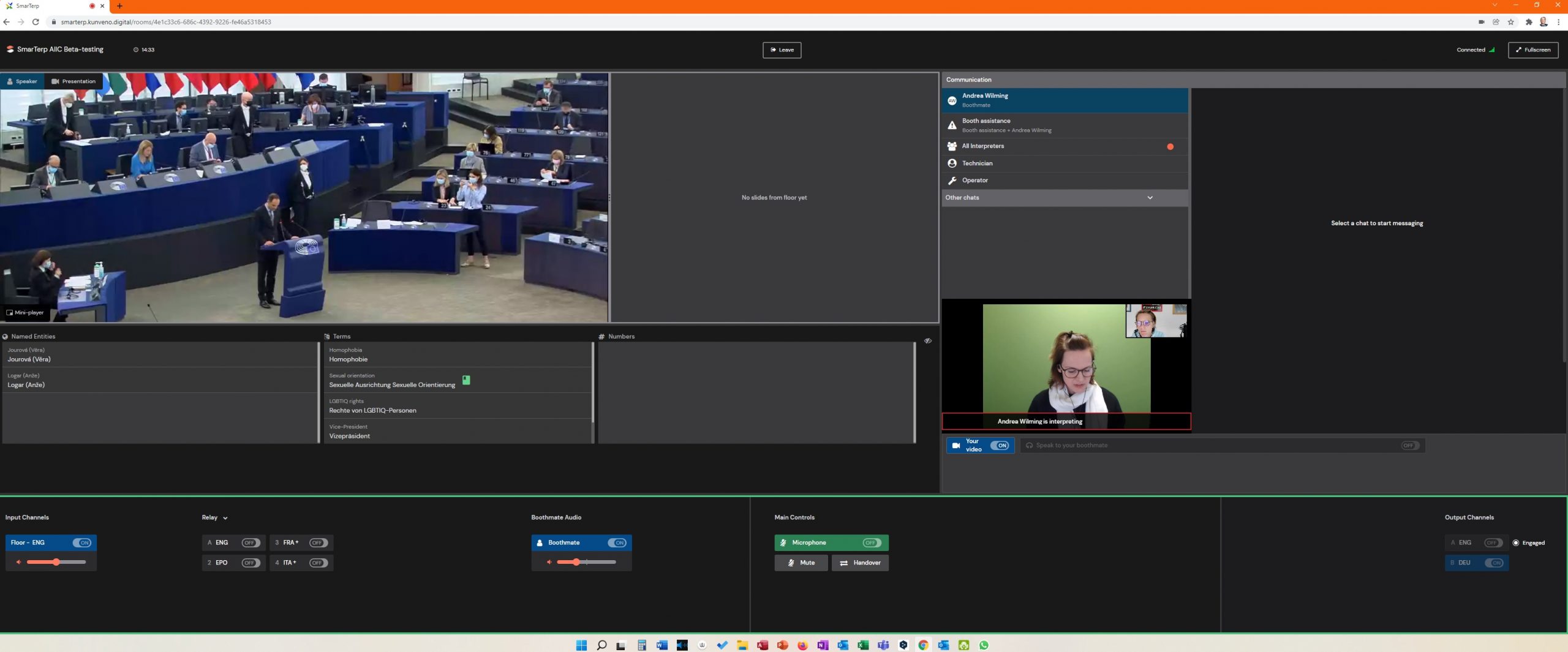

1. An RSI (Remote Simultaneous Interpreting) platform, aiming to create the ideal conditions for a high-quality RSI service through (a) ISO-compliant audio and video quality, (b) communication options with all actors involved in the assignment (technician, operator, conference moderator, other booths via chat, and the boothmate via chat and a direct audio and video channel), (c) an RSI console allowing interpreters to perform all key actions required by this interpreting mode (change input and output channel, control their microphone, listen to their boothmate, pick up relay).

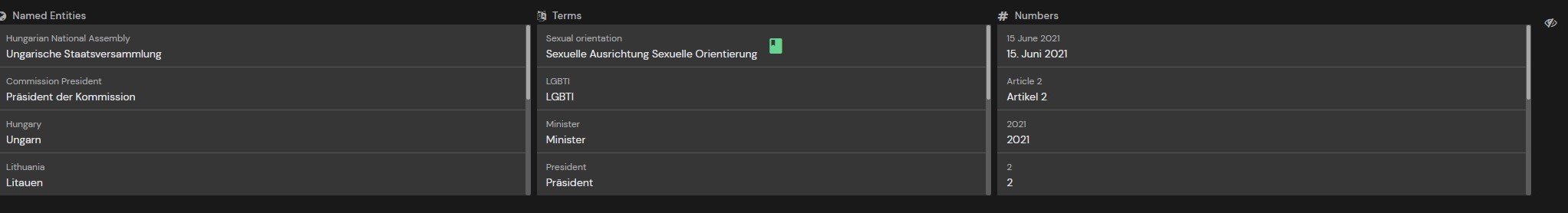

2. An ASR (Automatic Speech Recognition)- and AI-powered CAI tool, supporting interpreters in the interpretation of common ‘problem triggers’ (named entities, acronyms and specialised terms, and numbers). The Beta version of SmarTerp is the outcome of a close collaboration with practitioners, who have taken an active part in shaping all phases of the design and development of the solution.

For the beta test, Andrea Wilming and I were assigned to staff the German booth and asked to prepare a glossary of about 70 to 100 entries on the rights of LGBTIQ citizens in the EU. To do our reputation justice, we started this endeavour with meticulous preparation. We created a team glossary in Google Sheets and started to cram all sorts of vocabulary into it (which is basically what we had been asked to do). But halfway through the task, we started to wonder how to make our glossary really “SmarTerp-proof”.

Questions came up like: If I put LGBTIQ into my glossary and someone says LGBT, will the system recognise it as a partial match? No, it won’t. Susana Rodríguez explained to us that short entries are more likely to be recognised by the system (which runs counter to our tendency to write longer entries with a bit of context). So we ended up creating four alternative entries for the different variants, and found it reassuring that we would be able to read from the screen which of the acronyms was being used.

| German | English |
| --- | --- |
| LGBT | LGBT |
| LGBTQ | LGBTQ |
| LGBTIQ | LGBTIQ |
| LGBTIQ+ | LGBTIQ+ |
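To illustrate why each variant needs its own entry, here is a minimal sketch of exact-match lookup. It is purely hypothetical: the post does not describe how SmarTerp matches terms internally, and the function name `find_terms` and the punctuation handling are my own assumptions. It only shows the behaviour we observed, namely that an entry is found when it appears exactly as written and not as part of a longer or shorter form.

```python
from __future__ import annotations

# Hypothetical illustration only; this is NOT SmarTerp's actual matching logic.

def find_terms(transcript: str, glossary: dict[str, str]) -> list[tuple[str, str]]:
    """Return (source, target) pairs for words that exactly equal a glossary key."""
    hits = []
    for token in transcript.split():
        # Strip surrounding punctuation, but keep "+" so that "LGBTIQ+" survives.
        word = token.strip(".,;:!?()\"'")
        if word in glossary:
            hits.append((word, glossary[word]))
    return hits

# A glossary with only the long form does not catch the shorter acronym.
single_entry = {"LGBTIQ": "LGBTIQ"}

# Adding one short entry per variant makes each of them recognisable.
with_variants = {**single_entry, "LGBT": "LGBT", "LGBTQ": "LGBTQ", "LGBTIQ+": "LGBTIQ+"}

speech = "The rights of LGBT citizens in the EU."
print(find_terms(speech, single_entry))
print(find_terms(speech, with_variants))
```

With exact matching of this kind, the first call finds nothing and the second finds the `LGBT` entry, which is the reason short, separate entries paid off for us.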

The next question that came up during preparation: If there are two terms in German, e.g. Gleichbehandlung and Diskriminierungsverbot, and only one equivalent in English, non-discrimination, will SmarTerp show me both German terms when the speaker uses the English term, so that I can choose the one I like best? Yes, it will. But again, if you want it to recognise both Gleichbehandlung and Diskriminierungsverbot in a German speech, you will need to create two separate entries, one for each synonym.

| German | English |
| --- | --- |
| Gleichbehandlung | non-discrimination |
| Diskriminierungsverbot | non-discrimination |
| nichtdiskriminierend | non-discriminatory |
| diskriminierungsfrei | non-discriminatory |
| inklusiv | non-discriminatory |
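The one-row-per-synonym layout can be pictured as a simple two-way index. The sketch below is hypothetical (the names `build_index`, `de_to_en` and `en_to_de` are mine, and SmarTerp's real data model is not described in this post); it merely shows how several German rows that share one English equivalent can all be offered when the English term is spoken, while each German term stays individually recognisable.

```python
from collections import defaultdict

# Hypothetical illustration; the rows mirror the glossary entries above.
ENTRIES = [
    ("Gleichbehandlung", "non-discrimination"),
    ("Diskriminierungsverbot", "non-discrimination"),
    ("nichtdiskriminierend", "non-discriminatory"),
    ("diskriminierungsfrei", "non-discriminatory"),
    ("inklusiv", "non-discriminatory"),
]

def build_index(entries):
    """Build lookup tables in both directions from (German, English) rows."""
    de_to_en = defaultdict(list)
    en_to_de = defaultdict(list)
    for german, english in entries:
        de_to_en[german].append(english)
        en_to_de[english].append(german)
    return de_to_en, en_to_de

de_to_en, en_to_de = build_index(ENTRIES)

# English speaker says "non-discrimination": both German options are available.
print(en_to_de["non-discrimination"])

# German speaker says "Diskriminierungsverbot": found because it has its own row.
print(de_to_en["Diskriminierungsverbot"])
```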

So my lesson learned number 1 is: Beware how you prepare! Building a glossary for yourself is different from building it for (a boothmate or) a machine.

But the best was still to come: After having completed a self-paced, very efficient and well-structured online training module, we were sent to the virtual booth along with our colleagues from the French, Spanish and Italian booths.

We were given enough time in advance to have a look around and play with the different functions of the user interface. My first impression: It was well organised in functional groups, so it was very easy to find our way around. I personally would have preferred white or a light colour for the background, which I find easier to read, or even the option to assign different background colours to different functional areas for ease of orientation. But maybe the “dark mode” has its advantages in terms of visual perception? Indeed it has, as Icíar Villamayor (see interview below) explained to me. It is thought to cause less eye fatigue than looking at a white or bright screen for hours, which may even cause headaches. It is also easier to highlight important elements on a dark background, so searching for them is less distracting.

SmarTerp offers all sorts of communication channels among interpreters: You can chat with your boothmate, the whole team, the technician or the operator (interestingly, many of us spontaneously missed emojis :-)), and there is a dedicated video and audio connection between boothmates. These are functions that had been widely asked for in the interpreting community. Now that we had the whole range of options at our fingertips, we were all absolutely delighted, although some found the plethora of options rather overwhelming. But then, individual preferences as to which elements were crucial were far from homogeneous either. I personally, for example, can do perfectly well without a booth video connection and handover function as long as there is a good chat function and I can listen to my boothmate working. Others, however, found there were too many chat options, or wanted to be able to monitor the different chat channels at the same time. It was also discussed whether the video window was too big or too small, whether the support area should be rearranged, and whether users might be able to rearrange the different functional areas according to personal preference … Which then made me wonder: who is the brain behind this thoughtfully designed user interface? Luckily I was introduced to Icíar Villamayor, front-end developer and graphic designer from Madrid, and I had the chance to ask her some questions.

Question: What did you find special about designing the user interface of SmarTerp?

Icíar: Everything was special! Until the project started I had never thought about simultaneous interpreting; I barely even knew what it was about and what the interpreter’s workflow was. You’d expect anything involving languages to be at least a bit intricate, especially on the interpreter’s side, but the number of people who take part “backstage” in the interpreting process was something I never expected.

It was an ambitious project from the beginning. The main goal was to simulate an in-person interpreting setting and this was something that hadn’t already been done in any of the other simultaneous interpreting apps available.

For me, one of the most special parts of SmarTerp was the challenge it presented in terms of information architecture. We had a lot of information on the screen (features, text, tiny icons and interactive buttons) and a very focused individual who couldn’t be distracted from their task. Being able to define some hierarchy in those items and organise them in blocks to ease the interpreter’s job was a challenge but also a learning experience.

Question: What did you find difficult to implement?

Icíar: The difficulty wasn’t in the implementation really. It is rather the kind of app that poses the challenge. Usually when you start to create a new platform, the first thing you do is define the target population. Then you can check which other applications or platforms there are and find out about usability patterns and the ways the existing solutions solve the problems that you will most probably encounter yourself in the course of the development process. For example, if we wanted to create an app to share videos, we would have a clear idea that our target users would be between 13 and 35 years old and would have some experience with social networks. We know that these users know that by double-clicking on a post they can “like” it, that by swiping down they can scroll, etc. We could build on these usability patterns that our users know so well.

At SmarTerp we are dealing with a population segment that is not defined by age. Thus we have a user group with a huge spectrum in terms of how they handle technology. Add to this the fact that the only real usability reference we have is the “traditional” hardware console, and there you are with a rather challenging project.

It is true that we have taken references from videoconferencing applications for the video module, as well as references from instant messaging applications for the communication module, but our users are not like any other user. The level of concentration required by simultaneous interpreting forces us to rethink the use of every little feature we want to provide.

Question: Was there anything you – as a non-interpreter – liked/did not like about SmarTerp?

Icíar: I really liked the whole concept from the very beginning of the project. It’s interesting to discover new professions and be in direct contact with professionals who constantly give you feedback. I wouldn’t think of it in terms of liking or not liking but more of “We really thought this was perfect but it turns out it isn’t THAT usable?” That’s where we’re currently at with the communication module (the chat), which is also one of the most important features. From the beginning, it was difficult to establish who each user could write to via chat and how we could make the messages easier to manage for active interpreters, and this is still an open issue. We’ve received some feedback from user testing carried out by Francesca Maria Frittella and Susana Rodríguez and, even though we can’t follow every interpreter’s suggestion word for word, the results show we still have some work to do.

Question: Do you think it will be possible to accommodate yet another section on the screen (provided it is a big one of, say, 34 inches) to display meeting documents and key terminology?

Icíar: Yeah! We’ve never ruled out adding more features to the interpreter’s interface. If there’s enough space it is definitely something that can be done. I guess we would need to interview interpreters to find out what the preferred feature would be. I can’t make any promises though.

My lesson number 2: Interpreters are hard to please. And big screens are a good idea.

The interpreting experience itself – the debate of an EP committee of about 90 minutes on LGBTIQ rights – was rather exhausting: Many speeches were read out at full speed and were almost un-interpretable. But this was the whole point of the exercise, after all: to see if, under real-life, worst-case, maximum-cognitive-load conditions, we were able to make use of the near-real-time transcripts of numbers and named entities and the display of terminology.

And indeed, this AI-based live support, the innovative centrepiece of SmarTerp, really did its job! To my mind, the numbers and names came just in time to be helpful – I don’t think I would have been faster scribbling them down or typing them into the chat for my colleague. There were several moments where I checked the support section for numbers and two or three named entities (like OLAF and SECA), mainly because I wasn’t sure I had heard them correctly. It feels absolutely reassuring to know that there is a permanent flow of potentially useful information coming in, just in case you might need it. On the other hand, of course, this avalanche of information can be overwhelming, as you are bound to need only a tiny fraction of it. But I think that I would be able to just ignore the constant flow of incoming words and numbers as long as I don’t need it, and resort to it only when I do, just like you ignore your human colleague scribbling away next to you in the booth as long as you don’t need their help (and your digital companion will not resent you for not even looking at it). One thing I am not sure about yet is the reliability of the information offered by the AI. You can never be sure if the numbers and names are correct, and I noted the occasional mistranslation in the terms provided. A great plus is that SmarTerp marks reliable terms (i.e. those coming from the glossaries provided by the interpreters themselves) with a green symbol, so I suppose that it is a question of internalising it.

Lesson number 3: SmarTerp – think of it as an extremely helpful and untiring boothmate who is never ever offended if you don’t accept the support offered.

So what’s to come? I do of course have my personal wishlist for SmarTerp: I would love to have a section for sharing meeting documents and displaying them permanently along with some crucial information like key terminology and names. And at the end of a meeting, I would like to be able to download a sort of log file for follow-up purposes, e.g. extracting important terminology for future meetings on the same subject. I imagine this might also come in handy during the meeting in case I wanted to scroll back and check a term I had just noticed from the corner of my eye or hadn’t had the capacity to check while I was interpreting but wanted to do so once my boothmate had taken over. Here again, the cognitive load (writing down stuff for later reference) could be eased by the system.

Because this is what CAI is all about – reducing the cognitive load of simultaneous interpreting and freeing up capacity to use our professional sense of judgment instead of scribbling numbers and searching terminology. On this note, I am rather optimistic that SmarTerp will help us rethink our role and work as interpreters for the better.

About the author

Anja Rütten is a freelance conference interpreter for German (A), Spanish (B), English (C), and French (C) based in Düsseldorf, Germany. She has specialised in knowledge management since the mid-1990s.
